The modernization of our first datacenter, “Bunker”, started last October. It was a long-planned project that builds on the experience we gained from the DC2 construction and especially from the ongoing TIER IV certification. We had to start thinking differently, find new technologies and adapt to the technological trends of today and the near future.

How we rebuilt the data hall in DC1

If you’ve ever been on a tour with us, you may have seen a neat data hall with racks, the dominant Freecooling unit and other technology along the sides. Just a regular data room.

This is what it looked like in 2015.

In 2017, we bought more racks from HPE and extended the aisle.

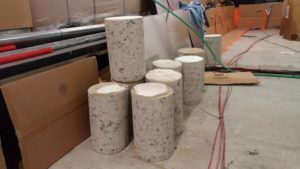

In 2018, the modernisation began. Extensive drilling work was carried out in the building to prepare everything for the expansion of the existing technology. Large openings were made throughout the building for cables, Freecooling parts and air conditioners. New doors appeared, while others were bricked up. The building changed inside and out.

Freecooling

Then we were able to start rebuilding the Freecooling, which is not only environmentally friendly but also reduces our operating costs. Servers produce a lot of heat, and cooling everything with air conditioners is now very expensive. Rising electricity prices can also affect the price of services.

Freecooling, on the other hand, cools either entirely or partially with cold air from outside. We built our Freecooling ourselves and bought the parts from local manufacturers, so we know it very well. Based on the data collected and the experience of the past years, we decided to improve it.

So the original version from 2015:

It was expanded in March 2019 as follows:

Then, in April 2019, it grew into this giant monster:

All of this technology is separated from the servers by a fire wall. Our first datacenter is designed throughout for 30 minutes of fire resistance. The second datacenter goes one step further and is being built for 90 minutes of fire resistance!

At the same time, the whole Freecooling was extended into another room, where we used to have a laboratory for testing oil cooling. We had to close the laboratory completely. Further tests will be carried out in the second datacenter.

We don’t have a lot of room left, do we :)? We like it this way, even though the data room looks like the inside of a spaceship. WEDOS DC1 “Bunker” is our private datacenter dedicated to our services only. We know where everything is and how it works.

One more interesting thing. Freecooling is like a big fan that can push huge amounts of air. We use the hot aisle and cold aisle principle: cold air is pushed under the floor to the cold aisle, where the servers draw it in and exhaust it at the back into the hot aisle. With Freecooling, we are able to send huge amounts of pressurized air (up to 70,000 m³ per hour) into the cold aisle when needed.

We took advantage of this when we discovered local overheating of one server component. The server fans slowed down once the temperature dropped below a certain threshold, even though the component was overheating. The server judged that it was cool and therefore fine, so it reduced the fan speed, and some components inside the server ran into trouble. Until the manufacturer fixed this with a firmware update, we simply pushed so much air through the servers that the component cooled down even at the same air temperature.
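
As a rough illustration of what that airflow means for cooling, the heat carried away by an air stream can be estimated as mass flow times specific heat times temperature rise. The sketch below assumes typical textbook values for air (density 1.2 kg/m³, specific heat 1005 J/(kg·K)) and hypothetical temperature differences between the two aisles. Only the 70,000 m³ per hour figure comes from this article; everything else is an assumption.

```python
# Rough estimate of the heat an air stream can carry away.
# The air properties and temperature rises are assumptions, not measurements.

AIR_DENSITY_KG_M3 = 1.2            # assumed: air at roughly 20 °C, sea level
AIR_SPECIFIC_HEAT_J_KG_K = 1005.0  # assumed: dry air at constant pressure


def heat_removed_kw(airflow_m3_per_hour: float, delta_t_kelvin: float) -> float:
    """Return the heat (kW) removed by air that warms up by delta_t_kelvin."""
    mass_flow_kg_s = airflow_m3_per_hour / 3600.0 * AIR_DENSITY_KG_M3
    return mass_flow_kg_s * AIR_SPECIFIC_HEAT_J_KG_K * delta_t_kelvin / 1000.0


if __name__ == "__main__":
    max_airflow = 70_000.0  # m³ per hour, the peak figure quoted above
    for delta_t in (5.0, 10.0, 15.0):  # hypothetical aisle temperature rises
        print(f"dT = {delta_t:4.1f} K -> {heat_removed_kw(max_airflow, delta_t):6.1f} kW")
```

Under these assumptions, each kelvin of temperature rise at full airflow corresponds to roughly 23 kW of heat removed.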

That’s why we are big fans of Freecooling.

Electricity

Last year we also connected our new substation from the north. Our datacenter DC1 is still powered from the south side, where we have cables running to the E.ON substation.

We have also added two new motor-generators in case of a long-term outage.

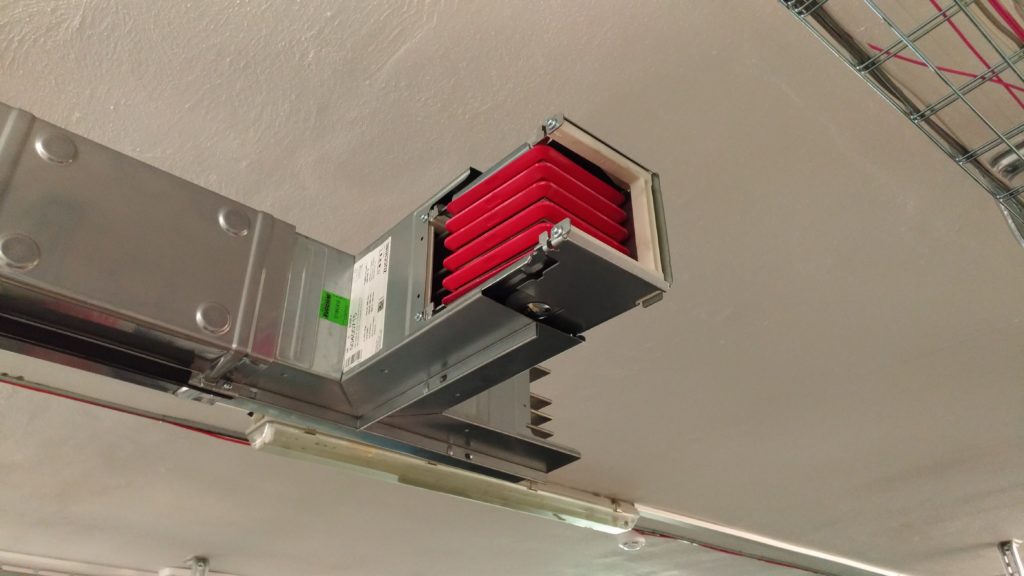

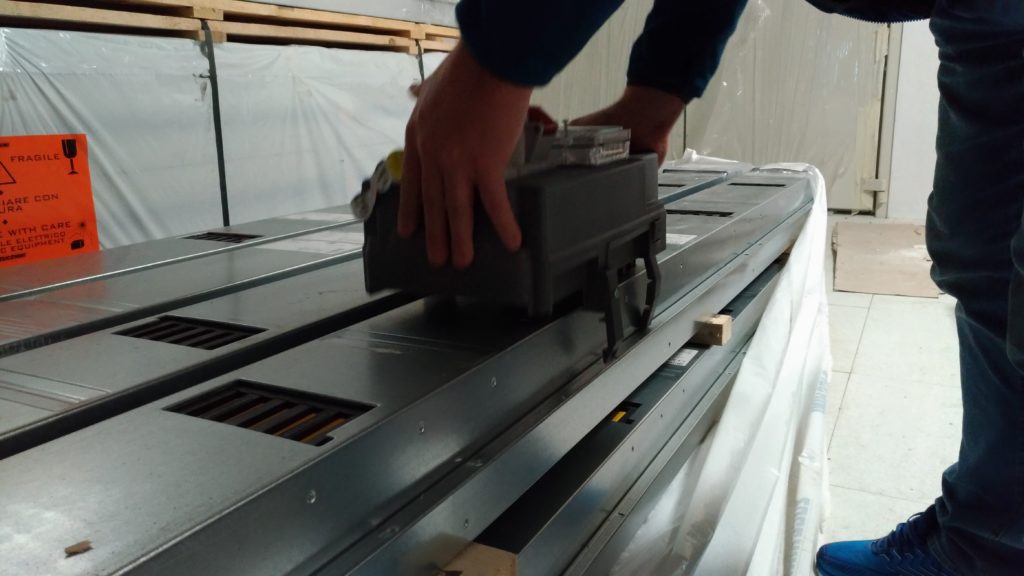

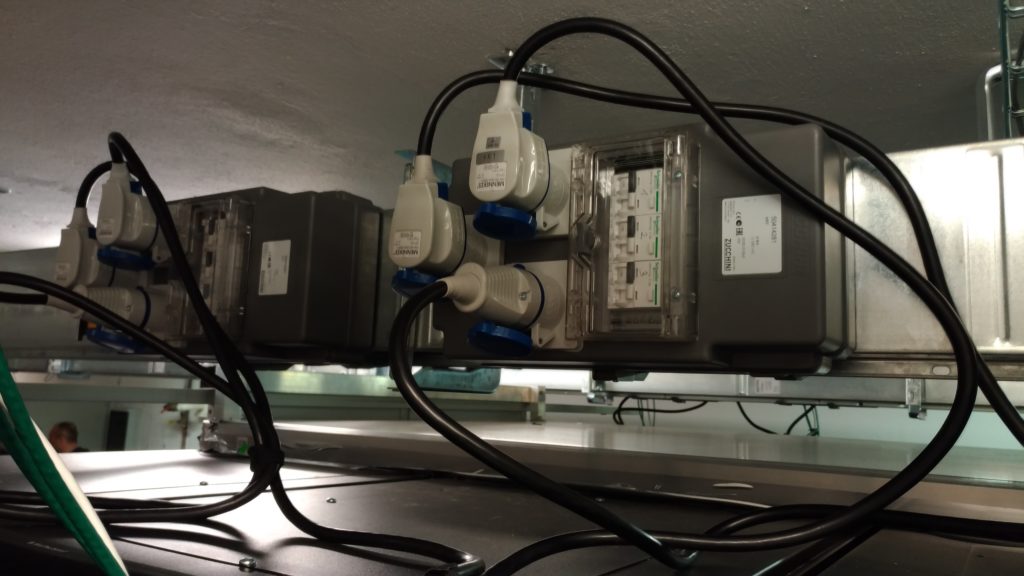

At the same time, we began rebuilding the first power branch (under the ceiling). This includes replacing the classic electrical cables in the server room with a busbar system. We will add the same solution under the floor this year, and we already have it in the second datacenter, DC2.

Just for the record: although it would have been cheaper and easier to run both power branches together, either under the ceiling or under the floor, we decided to follow TIER IV and have one branch under the ceiling and the other under the floor. TIER IV directly requires it. We know from long experience that there is something to it and that it is worth the investment.

The busbar system is built for 800 A below the ceiling and 800 A below the floor. The fire resistance of the busbar system is a minimum of 120 minutes.
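
For a sense of scale, the apparent power a three-phase busbar can carry is √3 × line voltage × current. The sketch below assumes a 400 V three-phase supply, which is the usual low-voltage standard in Europe but is not stated in this article. Only the 800 A rating is taken from the text, so treat the result as an illustration and not as our actual design capacity.

```python
import math

# Assumption: 400 V line-to-line, three-phase supply (not stated in the article).
ASSUMED_LINE_VOLTAGE_V = 400.0


def three_phase_apparent_power_kva(line_voltage_v: float, current_a: float) -> float:
    """Return apparent power in kVA for a balanced three-phase load."""
    return math.sqrt(3) * line_voltage_v * current_a / 1000.0


if __name__ == "__main__":
    busbar_rating_a = 800.0  # rating of each branch, as quoted above
    per_branch = three_phase_apparent_power_kva(ASSUMED_LINE_VOLTAGE_V, busbar_rating_a)
    print(f"One 800 A branch at 400 V: about {per_branch:.0f} kVA")
```

Because the two branches are redundant, the capacity of one branch is the relevant figure, not the sum of both.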

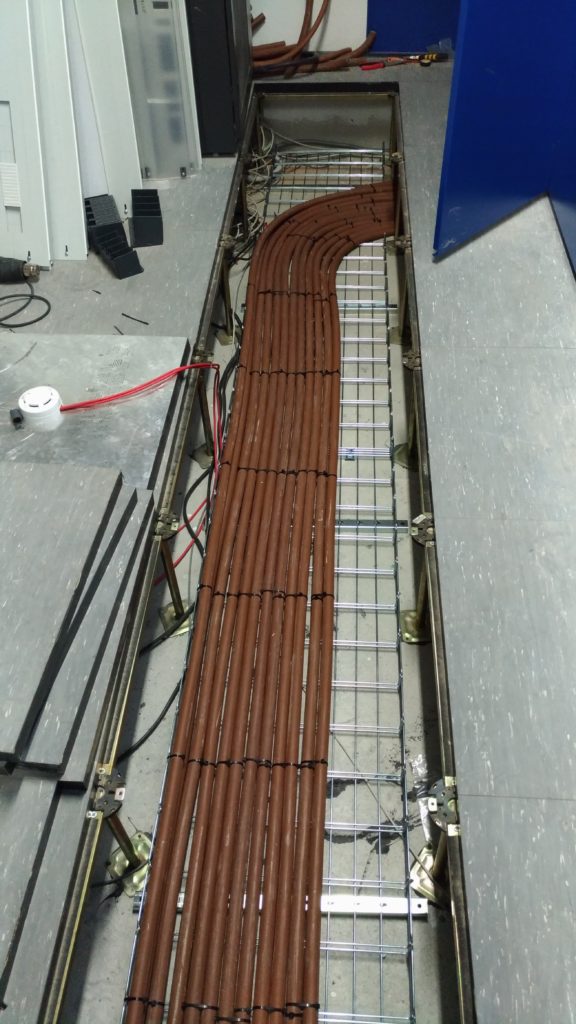

All electrical cables are also gradually being replaced with non-flammable (brown) ones. TIER IV inspiration again 🙂

Flame-retardant cables that do not produce fumes are many times more expensive than conventional cables, about 5 times more.

You can see in the picture that there are quite a lot of cables. We design everything for an operating temperature of 40 °C anywhere in the building. That’s why there are always a few more cables than usual and than you would find in a regular datacenter. TIER IV inspiration again.

You won’t find many such installations in Czech datacentres. It’s a huge cost…

And the pictures show only one part, one branch. There is more wiring elsewhere in the building.

Next time we’ll show you the electrical switchboards so you can see what they look like…

Connectivity

We are gradually increasing connectivity, both in the number of links and in speed. Most recently we added 100 Gbps to Cogent. DC1 is currently connected as follows:

Physical Routes:

- R2 (Cetin) 100 Gbps to SITEL (CeColo)

- R2 (Cetin) 100 Gbps to SITEL (CeColo) via another route

- R1 (CDT) 100 Gbps to the CRa Žižkov Tower

And we currently have these connections:

- Cogent 100 Gbps (launched in April 2019)

- Kaora 100 Gbps on Sitel

- Kaora 100 Gbps on Žižkov Tower

- Telia 100 Gbps via a Kaora port

- Telia 10 Gbps direct

- CDT 10 Gbps

We have now arranged 2 more suppliers.

Everything is really oversized. Firstly, so that we can offer 1 Gbps to some current (Dedicated Servers) and future (WEDOS Cloud) services, but also so that we can better withstand the increasing number and strength of DDoS attacks. New or improved forms of attack keep emerging, and the traffic needs to actually reach us so that we can analyse it better.

This year, the largest recorded attack was 37 Gbps and was directed at the VPS of one of our customers. Before the deployment of 100 Gbps connectivity, we would have had to deal with this kind of attack differently and with more difficulty, and we certainly could not have filtered it.
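
To put the 37 Gbps figure in context, here is a minimal sketch comparing the attack volume with link capacity. The 100 Gbps value matches the links listed above. The 10 Gbps comparison is only an illustrative assumption for a smaller link and does not describe our earlier topology.

```python
def link_utilisation(attack_gbps: float, link_gbps: float) -> float:
    """Return the fraction of a link's capacity consumed by attack traffic."""
    if link_gbps <= 0:
        raise ValueError("link capacity must be positive")
    return attack_gbps / link_gbps


def saturates(attack_gbps: float, link_gbps: float) -> bool:
    """True if the attack alone fills the link, leaving nothing to filter."""
    return link_utilisation(attack_gbps, link_gbps) >= 1.0


if __name__ == "__main__":
    attack_gbps = 37.0  # largest attack recorded this year, as quoted above
    for link_gbps in (10.0, 100.0):  # 10 Gbps is an illustrative assumption
        share = link_utilisation(attack_gbps, link_gbps)
        state = "saturated" if saturates(attack_gbps, link_gbps) else "headroom left"
        print(f"{link_gbps:5.0f} Gbps link: {share:.0%} used by the attack ({state})")
```

Once a link is saturated upstream, legitimate traffic is dropped before it ever reaches our filters, which is why the extra capacity matters.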

Other improvements

Of course, other improvements are also part of the modernisation. Leaving hardware aside, we have, for example, custom-made junction boxes and new batteries, or rather a complete battery room. The rooms are divided according to purpose and separated into fire compartments, and we have put new UPS units in the offices. But we’ll write about that next time.

There are a lot of improvements, and this is still only the first part of the modernization. In the next part, the second branches will be rebuilt. We are not on schedule yet because we need to complete the second datacenter at the same time. A lot of the work is done by our datacenter administrators who, for example, pull electrical cables. After all, they must have a complete overview of every construction job. We like to keep everything under control so that when something happens, we know exactly what happened and where.

We will gradually write more articles about the current datacenter and the newly built one.