We are not afraid to say that our second datacentre will be a one-of-a-kind piece of Czech technology that we can all be proud of. All of its servers will be cooled in an oil bath. This cooling technology is far more economical than air cooling. It will also let us move the excess heat to the nearby municipal swimming pool, where it will heat the water. This way, the bulk of the waste heat is recovered instead of being vented outside.

A technology of the future that has been with us for a long time

Cooling live equipment with demineralised oil is nothing new. It is used in transformer stations, for example.

Air is essentially a thermal insulator. Oil conducts heat many times better. If you took your home PC, put it in a big enough tub and poured oil over it, passive heatsinks would be enough. Of course, in real life the room would need to be kept at a constant temperature. There are plenty of how-to videos on YouTube 😉

Water could also be used for cooling, but it gradually becomes conductive (e.g. as it picks up dust), which would be a problem for both the servers and the operators. The oil we use is non-conductive and is an excellent electrical insulator, so it does not cause short circuits. At the same time, it has excellent thermal conductivity and low viscosity. It is also clear and odourless, and it is even used in the pharmaceutical industry.

So why hasn’t anyone tried oil cooling on servers before? In fact, it has been tried, at universities as ecological experiments. Some sites have been running fairly large oil-cooled installations for several years. There is one such installation in Vienna, for example, with several thousand servers submerged in oil. There are also companies that offer oil cooling commercially and build it to order.

However, there are many obstacles to wider adoption. First of all, you need a dedicated datacentre. The heat from the oil baths has to be carried away somehow, and you need to get rid of that heat all year round (the city swimming pool is ideal for this). A water circuit is the best way to carry the heat away, yet water is the last thing you want in a data hall. The design is also neither simple nor cheap.

The hardware itself is also a big problem. First, you have to modify it for passive cooling in an oil bath (remove the fans and anything that obstructs the flow of oil), which means both a physical intervention and a firmware modification. But once the fans are gone, you save up to 30% of the server’s electricity consumption, because that is how much the fans can draw.

Oil cooling itself needs only 1-3% of the energy. That is about 10 times less than in conventional modern datacentres. Compared with older datacentres, it is 20-40 times less energy spent on cooling! On top of that, as mentioned in the previous paragraph, server consumption is tens of percent lower simply because the fans are switched off (removed).
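To make the arithmetic above concrete, here is a rough sketch in Python. The 100 kW load is a made-up figure for illustration, the 30% fan share is the upper bound quoted above, and treating the 1-3% cooling overhead as a fraction of the fanless server load is our assumption, not a measured result.

```python
# Hypothetical draw of a set of air-cooled servers, in kW (illustrative only).
air_cooled_load_kw = 100.0

# Upper bound from the text: fans can account for up to 30% of server consumption.
fan_share = 0.30

# Cooling overhead of the oil bath from the text: 1-3%.
# Assumption: the percentage is taken relative to the fanless server load.
oil_cooling_overhead = (0.01, 0.03)


def oil_bath_total_kw(load_kw, fan_share, overhead):
    """Total draw after removing the fans and adding the oil-cooling overhead."""
    fanless_kw = load_kw * (1.0 - fan_share)
    return fanless_kw * (1.0 + overhead)


low = oil_bath_total_kw(air_cooled_load_kw, fan_share, oil_cooling_overhead[0])
high = oil_bath_total_kw(air_cooled_load_kw, fan_share, oil_cooling_overhead[1])
print(f"{low:.1f} kW to {high:.1f} kW")  # 70.7 kW to 72.1 kW
```

Under these assumptions, servers that drew 100 kW with fans would need roughly 71 to 72 kW in the oil bath, cooling included. The air-cooled figure would still have the air-conditioning overhead added on top.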

Warranty claims and servicing are a problem too. Try to imagine filing a warranty claim for PC components that have been sitting in oil 🙂 But we can solve that as well. We have tested chemicals that degrease servers perfectly, so you practically cannot tell that a server was ever in oil. We have been working on this over the past few years as well.

As you can guess, this means big investments, negotiations with suppliers and, of course, many years of development and testing. It is a cost in money and time that not many companies on the market can afford. Multinationals, on the other hand, prefer to build their datacentres far to the north, where more advanced air cooling methods work more effectively.

First a summary and some statistics

Datacentre WEDOS 2, aka “The Vault”, is a monolithic reinforced concrete structure with walls 30 to 110 cm thick. Part of the data hall is built into a slope, so it is partly underground.

Inside there are 10 racks for 120 oil baths, version 4.3. The baths will hold HPE Moonshot chassis, each with 45 servers. In total, DC WEDOS 2 is built for 10,800 physical servers.
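The numbers above also tell you how densely the oil baths are packed. A quick back-of-the-envelope check in Python follows. The "2 chassis per bath" result is derived from the published totals, not a figure stated in the text, and the variable names are ours.

```python
# Figures quoted in the text.
racks = 10
oil_baths = 120
servers_per_chassis = 45
total_servers = 10_800

# Derived values (not stated in the text, just arithmetic on the totals).
baths_per_rack, rack_remainder = divmod(oil_baths, racks)
chassis_total, chassis_remainder = divmod(total_servers, servers_per_chassis)
chassis_per_bath, bath_remainder = divmod(chassis_total, oil_baths)

# The totals divide evenly, so the published numbers are consistent.
assert rack_remainder == chassis_remainder == bath_remainder == 0

print(baths_per_rack)    # 12 oil baths per rack
print(chassis_total)     # 240 chassis in total
print(chassis_per_bath)  # 2 chassis per oil bath
```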

In the picture you can see one such rack. It will literally be a data warehouse. One level of oil baths will sit near the floor, the second in the middle and the third just below the ceiling, much like goods in a warehouse: simple racks with a high load capacity and shelves on them. Others store material on racks like these; we store data.

The data room is accessed through a real vault door, almost 10 cm thick and actually certified as a vault door. The door is triple-skinned, with a 73 mm compact layer made of a special high-strength compound that is reinforced with high-strength reinforcement. Hardened, drill-resistant plates sit under the locking mechanisms. The door corresponds to security class III but is certified only to class II to save money. Overall, it meets NBU 4 certification for Top Secret. It weighs 1000 kg in total.

An identical door leads into the datacentre building itself. So a would-be intruder would have to get past two special vault doors before reaching the actual technology section. Believe it or not, if you want to go from the office building to the datacenter, you must first pass through a “normal” security door (a so-called armoured door) of the kind used in regular commercial datacenters. These doors are also fire-rated, with a fire resistance of 90 minutes.

So before you get in, you have to go through the security door, then a vault door, then one more vault door, and only then will you see the servers. We should add that a door opens only after you place your hand on the vein-pattern reader and present a security card, and the whole process has to be confirmed by a second person at our other datacentre.

For DC WEDOS 2, we officially applied for TIER IV Operational Sustainability certification from the Uptime Institute in May 2017. The certification process (revising and completing the project documentation) is currently underway. So far we have not run into any major problems. It is not easy, though. This is literally a different league from ordinary datacentres, both in design and in the technology behind it.

The datacenter is connected to the e.ON substation on the neighbouring property, about 1 meter from our fence. A hydroelectric power station is connected to the same substation, on the same terminal block, and it can supply 100% of our datacenter’s capacity. So the electrons from the hydroelectric plant primarily “run” to us.

A total of 5 motor-generators will supply electricity if there is a problem. 3 are inside the DC2 building and 2 are on the roof. These are special motor-generators designed for continuous operation. Because of TIER IV, the motor-generators commonly used as backup cannot be used. Each motor-generator can carry the whole datacenter by itself. Each has diesel tanks for 12 hours of operation, and all of them can be refuelled during operation without anyone entering the datacenter.

One motor-generator inside backs up the main power supply from the substation. If the substation and this generator both fail, we still have 4 more motor-generators: 2 on each supply branch (one branch runs under the ceiling, the other under the floor), with one generator inside the building and one on the roof. Each branch can power 100% of the servers, their cooling and the other technological components required for operation, and the 2 motor-generators on each branch run in 1+1 mode. As a result, we have several layers of backup. The substation and 4 generators could fail and we would still be operating. Put another way, if the substation and 3 motor-generators fail, we can safely service them because we still have N+N backup.
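The failure counting above can be checked with a small sketch. It is a deliberately simplified model: it assumes any single surviving source can carry the whole datacentre (as stated for each motor-generator) and ignores the actual wiring and switching between the branches. The source names are made up for illustration.

```python
from itertools import combinations

# Hypothetical labels for the five motor-generators described in the text.
GENERATORS = [
    "G1_substation_backup",
    "G2_branch_a_inside",
    "G3_branch_a_roof",
    "G4_branch_b_inside",
    "G5_branch_b_roof",
]
SOURCES = ["substation"] + GENERATORS


def surviving(failed):
    """Sources still available after the given set of failures."""
    return [s for s in SOURCES if s not in failed]


def is_powered(failed):
    """Simplifying assumption: one surviving source is enough for the full load."""
    return len(surviving(failed)) >= 1


def worst_case_survivors(generators_lost):
    """Fewest sources left when the substation and that many generators fail."""
    return min(
        len(surviving({"substation", *combo}))
        for combo in combinations(GENERATORS, generators_lost)
    )


for lost in range(5):
    print(lost, worst_case_survivors(lost))
# 0 5
# 1 4
# 2 3
# 3 2
# 4 1
```

With the substation and 3 generators down, 2 generators remain (the N+N case); with the substation and 4 generators down, 1 remains and the datacentre still runs in this model.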

Another interesting feature of the motor-generators is that they are deliberately not identical. We use 2 different models of engines and generators, with different control units, so that a single manufacturing defect cannot affect all of them. They are also engineered and cooled differently. The first generator inside is cooled by outside air (it has its own radiator on the roof of the building). The second one inside is cooled by its own air conditioning. The third is water-cooled along with the servers. The back-up generators on the roof are all air-cooled as standard.

WEDOS DC2 will be connected to the internet via 3 different 100 Gbps routes and operators. This limits connectivity risks and our dependence on any single provider. One of the routes will go through Austria, in case Prague is cut off from the internet. In addition, WEDOS DC2 will be connected to WEDOS DC1, which also has 3x 100 Gbps out through 2 different operators (CTD and CETIN), running over 3 different physical routes (Písek, Tábor, Jihlava) to 2 different locations in Prague (THP, CeCOLO). So each DC will be able to use the other’s connectivity in an emergency.

In Prague, we now have 2 x 100GE connections (in different locations in Prague) to one supplier, who provides us with connectivity to other locations. We also have 100GE directly into one multinational network. Finally, we have 2 x 10GE to two other providers, and in March these two connections will be upgraded to 2 x 100GE.

And now for what’s new with us

Everything is on track. The biggest problem at the moment is probably the tangled flag again 🙂

The junction boxes gave us a bit of trouble. As it turned out, hardly any people or companies are willing to build such cabinets without also supplying the material (we tendered the material ourselves), so we had to wait for them. We have them now, and our electricians are working on the wiring.

Classic (non-flammable) cables run from the junction boxes and will be connected to the busbar system. This is a very elegant and safe solution that allows us to create a socket wherever we need it.

We use flame-retardant cables for absolutely everything, everywhere. They are the brown ones in the pictures. Everything is connected with these cables. They don’t burn, they don’t produce fumes, they don’t spread fire and they don’t release harmful gases… Simply put, safety first. These cables are extremely expensive (about 4-5 times more than conventional ones). Everything is sized according to the standards (including an ambient temperature of up to 40 degrees Celsius), plus a reserve.

We don’t use contactors, we use ATS. Everything is technologically quite simple (there is strength in simplicity): if the primary power supply to the ATS fails, the ATS switches to the backup, and when the primary source is restored, everything switches back to the primary. It is a safer solution and one that is ready for decades. We deliberately did not want the contactors used in most datacenters. Their lifetime is limited, they tolerate fewer switching cycles, and a fault can have catastrophic consequences.
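The switching rule described above is simple enough to write down as a tiny sketch. This only illustrates the logic as described (prefer the primary, fall back to the backup, return to the primary when it comes back). It is not the firmware of any real ATS, the function name is ours, and real devices add details such as transfer delays that are left out here.

```python
def select_source(primary_ok: bool, backup_ok: bool) -> str:
    """Pick the feed the ATS passes through, preferring the primary."""
    if primary_ok:
        return "primary"
    if backup_ok:
        return "backup"
    return "none"


# A made-up sequence of events: the primary fails for two steps, then returns.
events = [
    (True, True),    # normal operation
    (False, True),   # primary fails -> switch to backup
    (False, True),   # still on backup
    (True, True),    # primary restored -> switch back
]

history = [select_source(primary, backup) for primary, backup in events]
print(history)  # ['primary', 'backup', 'backup', 'primary']
```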

Another interesting feature of the switchboards is that one branch is composed of components from one manufacturer and the other branch is completely made of components from another manufacturer. Again, this prevents the risk of manufacturing defects…

Of course, each supply branch is in a different part of the building, and the two are properly fire-separated. Fire resistance is at least 90 minutes throughout the datacenter.

One electrical branch runs under the raised floor and the other under the ceiling. They don’t meet or see each other anywhere. Everything is thoroughly separated. The fire resistance of all penetrations is at least 90 minutes, and the busbar system, for example, is certified for 120 minutes.

UPS units sit between the switchboards and generators on one side and the busbar system with the servers on the other, but more about that next time.

Many structural modifications have been made to the roof to provide cooling for the generators. When the ventilation engineers saw what kind of motor-generators we were planning to put there, they became a bit more demanding 🙂

You see, a few bugs crept in and we had to use brute force.

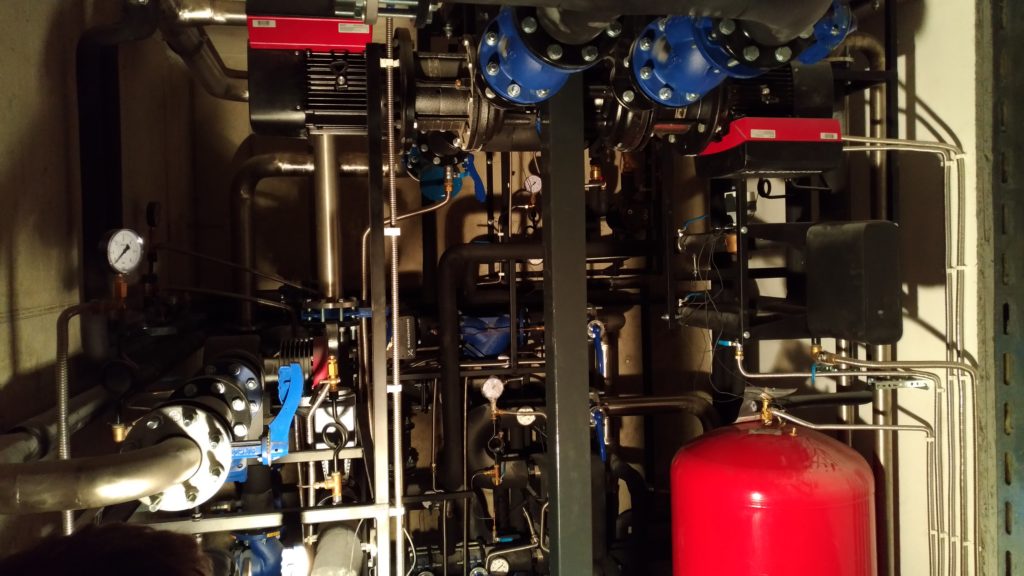

The most interesting part is the water cooling, or rather its control. It occupies two separate rooms, with one circuit in each. After many months we can show you the first completed room. Only small details remain to be added. All the technology runs along the sides and on the ceiling. The operator can only walk around the iron column. There’s not a lot of room; it looks like a submarine.

The second room is also nearing completion. There’s a bit more technology in it, so there’s more space.

One cooling branch is distributed under the ceiling and the other under the floor. They don’t meet anywhere and everything is fireproofed again for 90 minutes. Each branch has several cooling options and each has redundant pumps so that each can be serviced without downtime. Cooling is therefore in 2 x (N+1) mode.

As far as the data room itself is concerned, the work is mainly under the floor, but there is a copy of everything under the ceiling. Everything exists twice: once down below and once up above, with no crossings and no runs side by side. There are literally miles of cables being pulled.

Since the construction work is already finished, cleaning can start soon. We would like to start test operation as soon as possible. Next week we will bring in the first oil bath.

Conclusion

Although everything is moving towards the goal, a few things are still missing. They will not hold up test operation, though. We’ll keep you posted as we go along. In April we expect to blow in and connect the fibre optic routes, sink the first servers into the first bathtub and start testing.