We have an interesting chart for you today. In October we enjoyed warm, sunny days but also very cold, cloudy ones. This is nicely reflected in our graph of electricity consumption in DC1.

We also attach a graph of temperatures in Hluboká nad Vltavou, where we have our headquarters and DC1, and where we are building DC2.

The peaks show when our freecooling had to be assisted by air conditioning and electricity consumption rose. In the second half of the month it was colder, so we ran without air conditioning.

Freecooling vs air conditioning

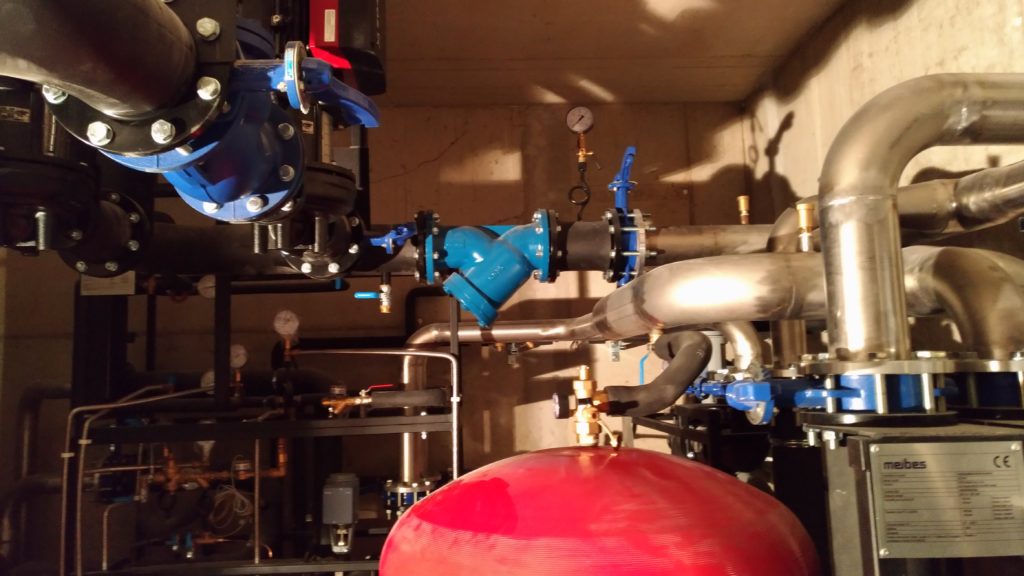

Our main cooling in DC1 is currently freecooling, specifically direct freecooling. It mixes cool outside air (cleaned through fine filters) with the warm air inside to reach the ideal temperature, which significantly reduces operating costs. Freecooling alone can keep the entire datacenter cool for most of the year. When it is warm outside (above about 23 °C), one of the air conditioners assists the freecooling. Above 27 °C outside we have to switch completely to air conditioning, and that's when we make our electricity suppliers happy 🙂
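The switching rule described above can be summarized as a simple threshold function. This is a minimal sketch that assumes the approximate 23 °C and 27 °C outdoor thresholds from the text. The names `select_cooling_mode` and `CoolingMode` are hypothetical and do not come from our actual control system.

```python
from enum import Enum


class CoolingMode(Enum):
    FREECOOLING = "freecooling only"
    ASSISTED = "freecooling + one air conditioner"
    AIR_CONDITIONING = "air conditioning only"


# Approximate outdoor thresholds from the text, in degrees Celsius.
ASSIST_ABOVE_C = 23.0
FULL_AC_ABOVE_C = 27.0


def select_cooling_mode(outdoor_temp_c: float) -> CoolingMode:
    """Pick a cooling mode from the outdoor temperature (hypothetical helper)."""
    if outdoor_temp_c > FULL_AC_ABOVE_C:
        return CoolingMode.AIR_CONDITIONING
    if outdoor_temp_c > ASSIST_ABOVE_C:
        return CoolingMode.ASSISTED
    return CoolingMode.FREECOOLING


for t in (15.0, 25.0, 30.0):
    print(t, select_cooling_mode(t).value)
```

A real controller would probably add hysteresis so that it does not flap between modes when the temperature hovers right at a threshold. The sketch leaves that out for clarity.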

The servers could withstand up to 33 °C and are certified for it, but our own testing showed a significantly higher hard drive failure rate at temperatures as low as 27 °C.

Removing approx. 125 kW of heat with freecooling takes 2–13 kW of electricity, depending on the outside temperature, the time of day, whether it is cloudy or sunny, and so on. With air conditioning it takes up to 50 kW.
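To put these figures side by side, we can compute how many kilowatts of heat each method moves per kilowatt of electricity. The inputs are the numbers from the paragraph above. The helper name is ours, for illustration only.

```python
HEAT_LOAD_KW = 125.0  # approximate heat load from the text


def heat_removed_per_kw(electric_kw: float, heat_kw: float = HEAT_LOAD_KW) -> float:
    """kW of heat moved per kW of electricity spent on cooling."""
    return heat_kw / electric_kw


best = heat_removed_per_kw(2.0)    # freecooling, best case
worst = heat_removed_per_kw(13.0)  # freecooling, worst case
ac = heat_removed_per_kw(50.0)     # air conditioning, upper figure
print(f"freecooling: {worst:.1f}-{best:.1f} kW of heat per kW, AC: {ac:.1f}")
```

Even the worst freecooling case (about 9.6 kW of heat per kW) beats air conditioning at its 50 kW draw (2.5 kW of heat per kW) several times over. Because 50 kW is an upper figure, the AC ratio should be read as a lower bound.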

Freecooling has other benefits too. Servers are very sensitive to temperature fluctuations, and freecooling can hold the server room temperature to within tenths of a degree very efficiently.

Since we move some 50,000 cubic meters of air per hour, we can raise the pressure very easily. Cooling is not just about the temperature of the air but about how much of it you can push through the racks, so freecooling can cool even equipment that is very difficult to cool with air conditioners. Push too hard, though, and the floor tiles might start jumping up and down 🙂
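The standard sensible-heat relation Q = ρ · V̇ · c_p · ΔT shows why airflow matters so much. The sketch below uses it to estimate how much the air must warm up to carry 125 kW away at 50,000 m³/h. It assumes textbook values for dry air near 20 °C (ρ ≈ 1.2 kg/m³, c_p ≈ 1005 J/(kg·K)). Our site sits somewhat above sea level, so the real density is a little lower.

```python
AIR_DENSITY = 1.2  # kg/m^3, approx. for dry air near 20 °C at sea level (assumption)
AIR_CP = 1005.0    # J/(kg*K), specific heat of dry air (assumption)


def required_delta_t(heat_w: float, airflow_m3_per_h: float) -> float:
    """Air temperature rise needed to carry heat_w away (sensible heat only)."""
    mass_flow_kg_s = AIR_DENSITY * airflow_m3_per_h / 3600.0
    return heat_w / (mass_flow_kg_s * AIR_CP)


print(round(required_delta_t(125_000, 50_000), 1))  # about 7.5 K
```

With that much air, a rise of only about 7.5 K is enough to carry away the whole heat load. Doubling the airflow would halve the required rise.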

We had our freecooling custom-built to our own designs in 2013. It cost us about one million crowns at the time, and we recovered the cost within the first year. Our consumption is much higher today, so we had it expanded as part of the 2018/19 modernization.
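A simple, undiscounted payback estimate divides the investment by the yearly electricity saving. In the sketch below, only the one million crowns comes from the text. The saved power and the price per kWh are placeholders chosen for illustration, not our actual figures, and the function name is hypothetical.

```python
HOURS_PER_YEAR = 8760


def payback_years(capex: float, saved_kw: float, price_per_kwh: float,
                  hours: float = HOURS_PER_YEAR) -> float:
    """Simple (undiscounted) payback period in years."""
    annual_saving = saved_kw * hours * price_per_kwh
    return capex / annual_saving


# capex from the text; saved_kw and price_per_kwh are hypothetical placeholders
print(round(payback_years(1_000_000, saved_kw=40, price_per_kwh=3.0), 2))
```

With these placeholder inputs the payback comes out just under a year. The real figure depends on how many hours the air conditioning would otherwise have run.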

A large part of the freecooling system runs through the upper floor, where our offices are, and up to the roof. Originally it led to the yard, where both the intake and the exhaust were. As we grew, however, the air in the yard warmed up considerably and the warm exhaust air was being sucked back in.

In 2017 we extended the exhaust to the roof, which improved efficiency.

Air Conditioning

We run air conditioners as little as possible. It’s the most expensive cooling method.

Until the upgrade, all our air conditioning units were on the roof.

As part of the 2018/19 DC1 upgrade, four air conditioning units were moved behind the datacenter, where they will form a separate branch.

What about the white spike at the beginning of the month?

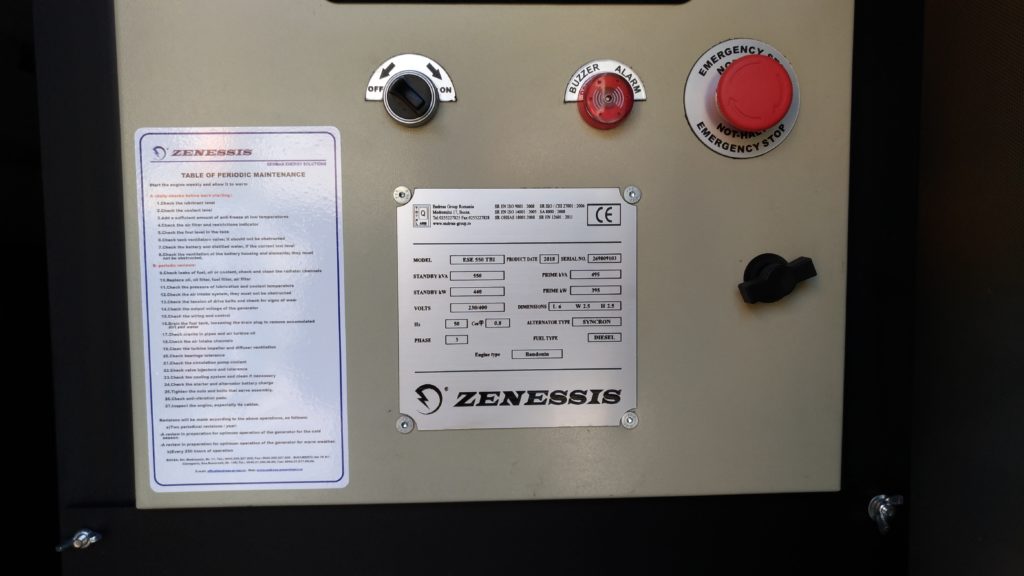

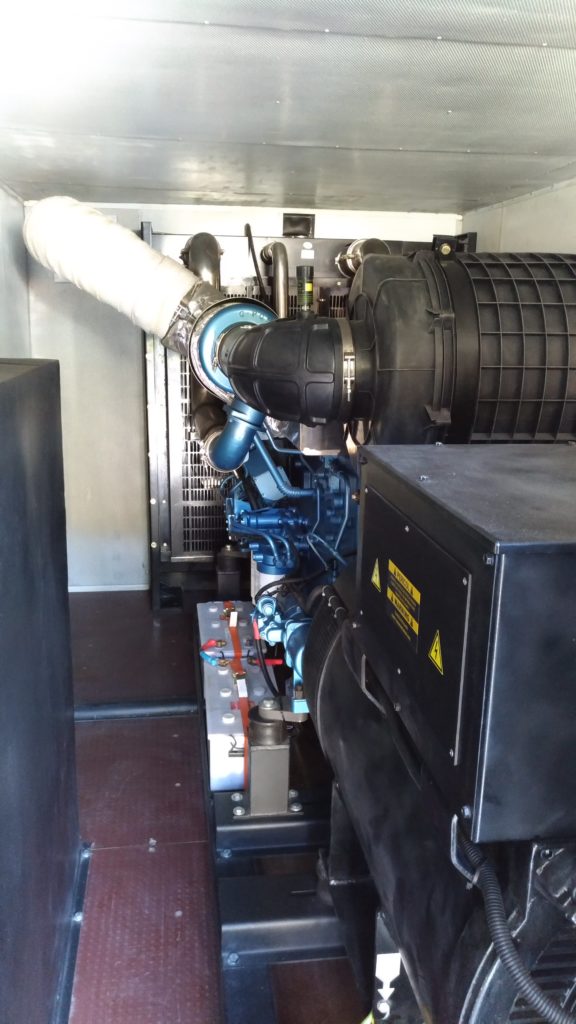

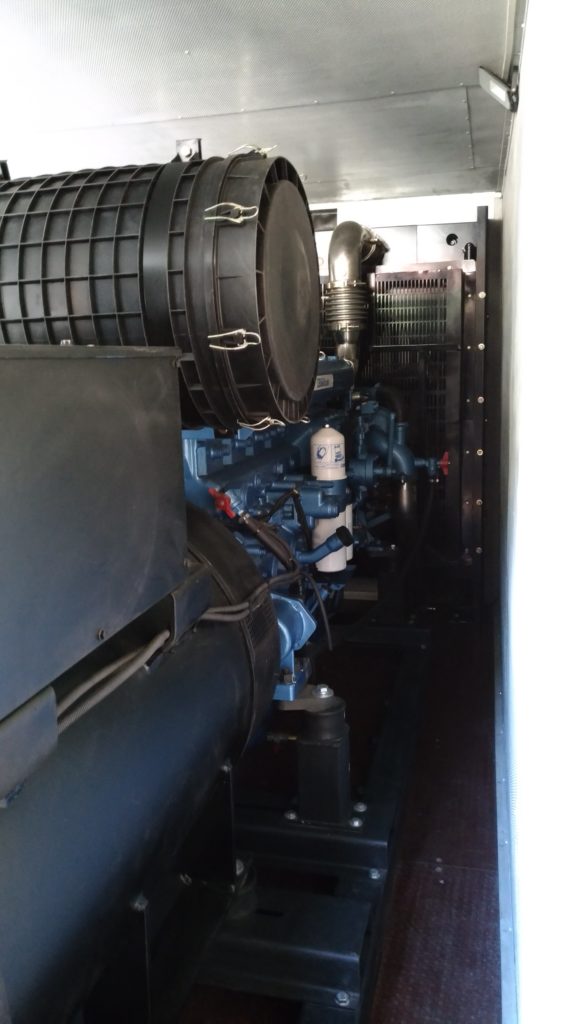

As part of the modernization and the construction of DC2, we bought five new motor-generators. Among them is a seven-and-a-half-ton 550 kVA unit that alone can power the entire datacenter with everything it will ever contain 🙂

At the very beginning of the month we ran a stress test to check what it can really do. We test the generators every week (always on the same day at the same hour), and in the first week of every month we do a real live test: we disconnect the mains power and check that everything works. The whole datacenter then runs on backup sources for about 25–30 minutes while we evaluate every variable we can monitor. That way, we find “problems” before they become real problems…

DC1 now has 3 motor-generators, each of which can run the datacenter on its own. DC2, which we are completing, has 5 motor-generators: 3 inside the building and 2 on the roof. So everything is backed up several times over. We’re serious about TIER IV.
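Redundancy like this is usually written as N+k, where N units are needed for the full load and k spares can fail. If each DC1 generator can carry the datacenter alone, then N = 1 and three units give N+2. The kVA values in the example are hypothetical. The sketch only counts capacity and ignores fuel, switchover time and maintenance windows.

```python
import math


def spare_units(installed: int, load_kva: float, unit_kva: float) -> int:
    """Return k in N+k: how many units may fail while the rest still carry the load."""
    needed = math.ceil(load_kva / unit_kva)  # N, units required for the full load
    return installed - needed


# Hypothetical load that a single unit can carry on its own, so N = 1
print(spare_units(installed=3, load_kva=400, unit_kva=550))  # N+2, so this prints 2
```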

We run efficiently, so rising electricity prices won’t affect the price of our services

From the beginning, we have tried to use the most efficient cooling methods possible and have invested in more expensive but more efficient servers. As a result, electricity is only a fraction of a percent of our costs. Even if we had to pay 2x or 3x more for electricity, we wouldn’t have to raise our prices (except perhaps for discounted promotional dedicated servers).
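The reasoning here is simple arithmetic. If electricity makes up a share f of total costs and its price is multiplied by m, total costs rise by f · (m − 1). The text only says "a fraction of a percent", so the 0.5 % share below is an illustrative assumption.

```python
def total_cost_increase(electricity_share: float, price_multiplier: float) -> float:
    """Relative rise in total costs when only the electricity price changes."""
    return electricity_share * (price_multiplier - 1.0)


# "a fraction of a percent": 0.5 % is an illustrative assumption
for m in (2.0, 3.0):
    print(f"{m:.0f}x electricity -> +{total_cost_increase(0.005, m):.1%} total costs")
```

Even tripling the electricity price would then add only about one percent to total costs.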

New technologies also play a part in this. Today’s processors can be very powerful yet economical, and they reduce their consumption when idle.

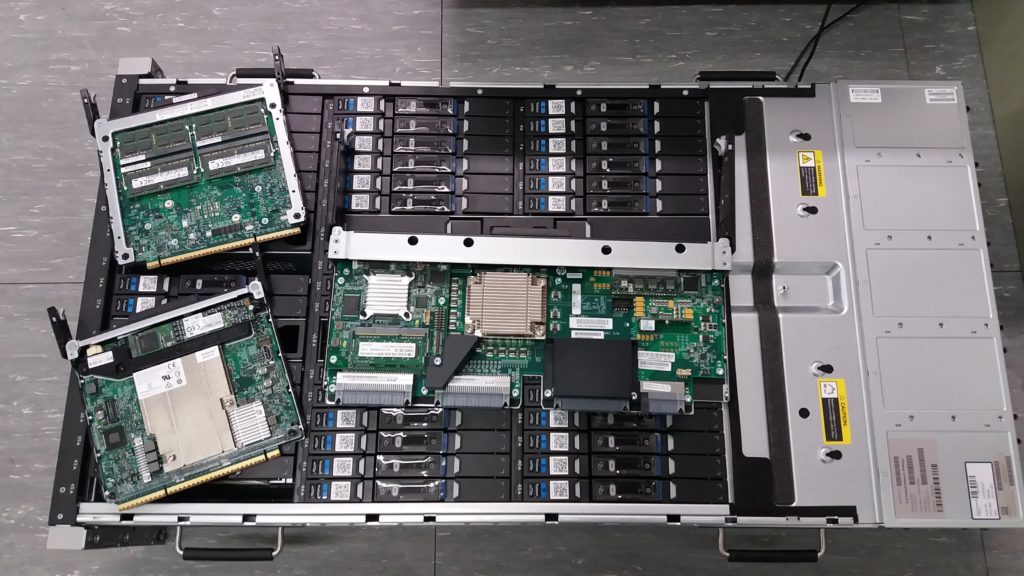

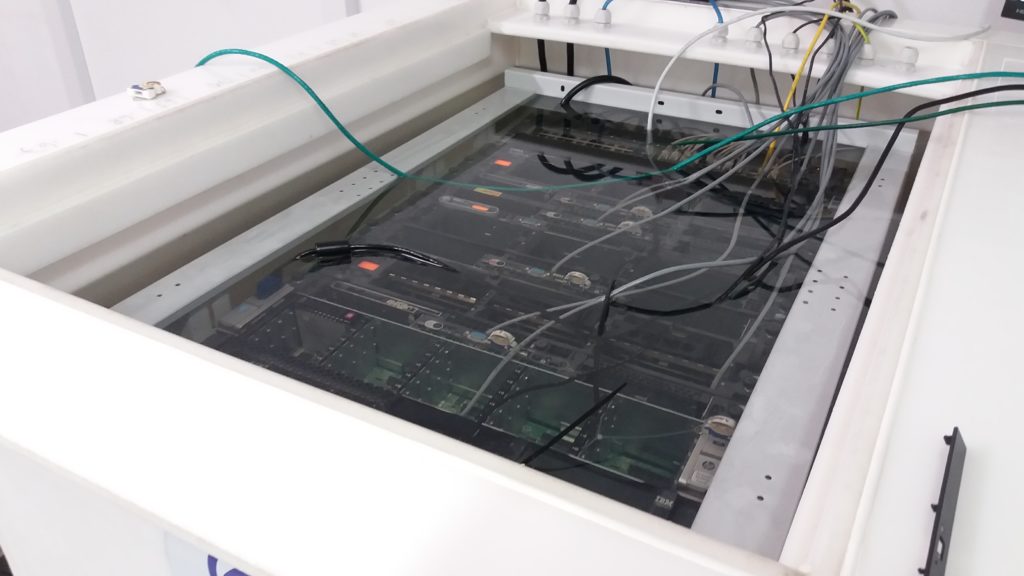

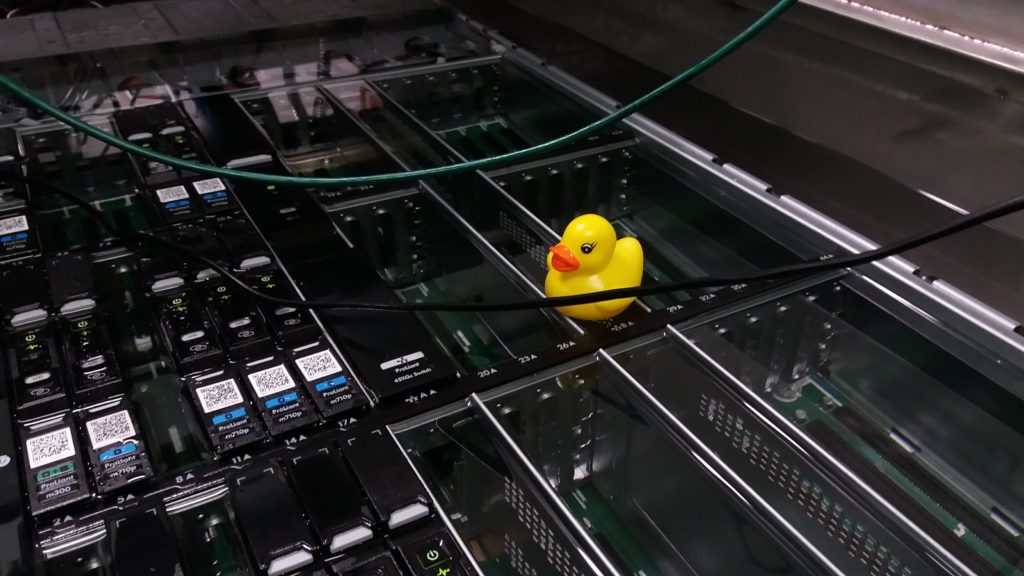

The HPE Moonshot concept, although it heats like crazy, delivers far more performance for less electricity. One chassis holds 45 servers and 2 switches (each with 4 × 40 Gbps uplinks and 45 × 10 Gbps ports), sharing 4 common power supplies and 5 common fans. This concept saves tens of percent in energy costs, and combined with extremely economical freecooling the savings are even greater…

Of course, all of this has a much higher purchase price, but it’s worth it in the end.

So you don’t have to worry that we’ll go up in price just because of electricity 😉

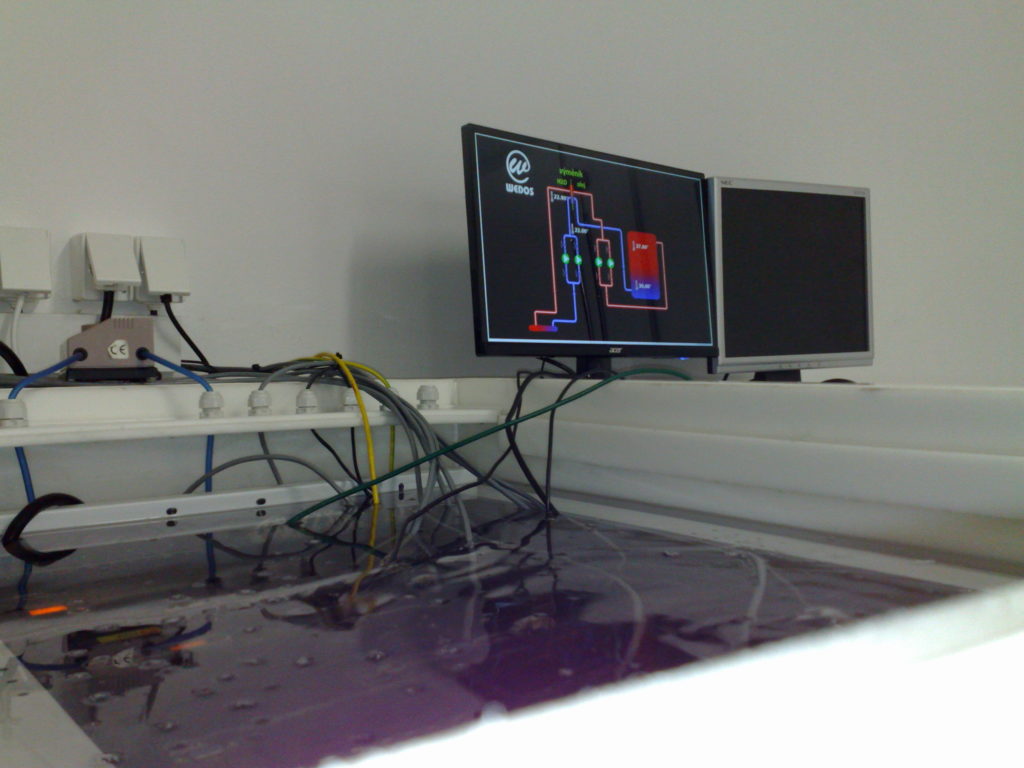

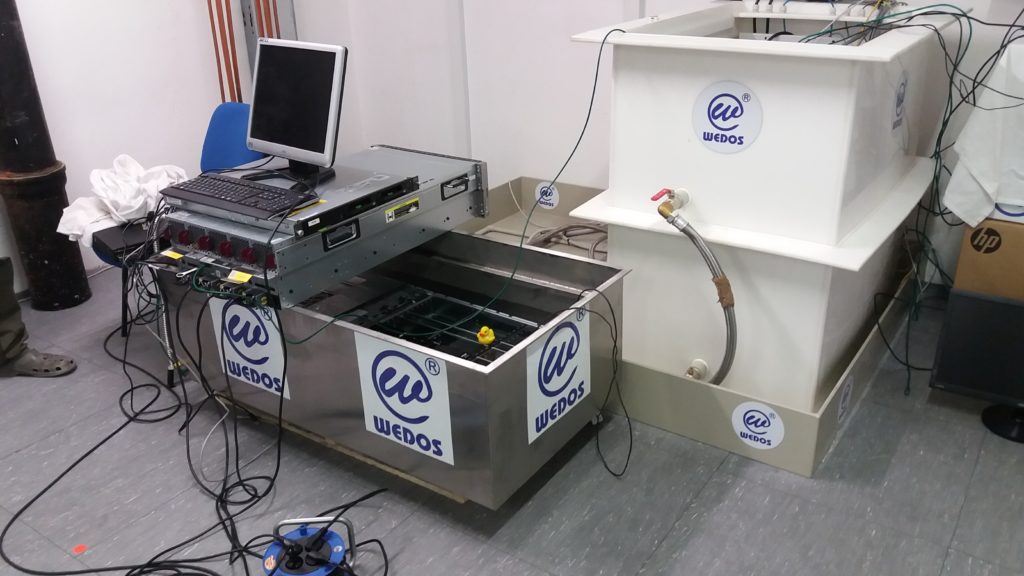

Oil cooling is the future

Once DC2 is running, with the servers immersed in an oil bath, cooling costs will be negligible. Better still, we will be able to reuse the heat produced: in our case, to heat our offices and the town swimming pool. A full datacenter could heat “half” of Hluboká nad Vltavou in winter.

Maybe one day we will see datacenters on housing estates that will heat apartment blocks in winter 🙂

Interesting fact at the end

By the way, all fans have to be removed from the servers we immerse in oil, which saves 30% of energy on average. So we save tens of percent on energy thanks to energy-efficient servers, another tens of percent thanks to freecooling, and even more with oil cooling… But that’s not all. Waste heat heats the buildings of both datacenters: we turned off the gas a few years ago and now heat all our rooms with waste heat. In addition, the new datacenter will send its waste heat to the municipal swimming pool, the neighboring building and even our outdoor parking lot…
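Savings like these combine multiplicatively rather than additively: each saving applies to whatever consumption is left after the previous ones. The sketch assumes all percentages refer to the same total energy. In practice, the fan saving applies to server power and the freecooling saving to cooling power, so the bases differ. The 30% comes from the text, and the 20% is a hypothetical second saving.

```python
from math import prod


def combined_saving(*savings: float) -> float:
    """Overall saving when each fraction applies to what is left after the previous ones."""
    return 1.0 - prod(1.0 - s for s in savings)


# 30 % from removing fans (from the text) plus a hypothetical 20 % elsewhere
print(f"{combined_saving(0.30, 0.20):.0%}")
```

Two savings of 30% and 20% therefore give 44% overall, not 50%.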

It’s green, it’s economical…